Which now as I type that makes me think this could actually be done server side given all of our servers have access to wget. Hmmm…

Ok so wget is actually really good and opens up some interesting possibilities here. I tried the command from that linked guide

wget -mkEpnp https://blog.timowens.io

when logged in via ssh to my Reclaim Hosting account and it generated a folder named blog.timowens.io with everything inside it. It took 5 minutes and 43 seconds to download 55Mb of stuff and convert the links in all of the pages to local relative ones. I uploaded it to Amazon S3 which can do static file hosting so that folks could see the result.

I could see combining wget with s3cmd and automating this whole thing which would be really interesting. If nothing else it would also just be crazy cool to be able to have a cPanel app that could take a URL as input and throw a folder in your hosting account with a static archive.

I wish to have that.

That is so awesome! How cool, so something like this could possibly be automated on Reclaim?

Seems like it could be a plugin . . . for my scenario that’d be crazy slick.

It actually seems pretty straightforward (in my head anyway). Just need one user credential page for the S3 stuff.

Making this even more interesting, I tested the wget functionality with a local hosts file and was able to archive a site on a server for which the domain expired almost a year ago. Damn that’s cool.

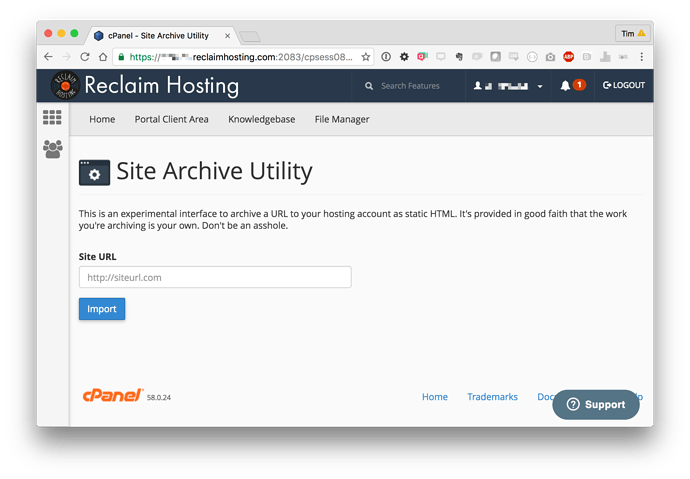

I’ve started building the plugin this could become, just a dummy interface for now. I’ll probably start with a basic “Give me a URL and the folder location you want to save to” and then once that’s working we can look at fancier options like scheduled archive, S3 and other remote archives, etc.

Keeps getting better and better.

If you want to make sure people are archiving only their hosted sites, I wonder if you could do something like the way Google does site verification - generate a file with some kind of hashed name/code in it that has to be loaded at the root level of the server. The script could then do some kind of verification to make sure the person is only archiving a site they manage

I was interested in Boris Mann’s idea on Twitter of this method losing metadata:

Would love if he expounded a bit on that, he’s the one who was pointing to the new hackstack before it became all the rage ![]()

Hey all. If you take a database backed site like Wordpress or Drupal and archive it to “flat” HTML, you take a one way trip to losing all metadata.

What I mean by that is, information about posts and pages like date created, author, tags, categories, etc.

Especially for large archives, it means you can’t easily remix the site content again.

I’ve been using Jekyll, a static site generator, for this same purpose. Exporting to Jekyll means individual posts or pages are exported into HTML / markdown, with YAML front matter that contains this metadata.

That last bit was a bit gibberish if you haven’t played with Jekyll yet. There is a block of text at the top of each text file that has author, tags, etc.

The downside to exporting to Jekyll is that it doesn’t preserve the theme (because it’s saving the content, not the presentation layer). And, that it’s learning a little bit of Jekyll.

There is a WP plugin for Jekyll exports: Jekyll Exporter – WordPress plugin | WordPress.org English (Canada)

Haven’t tried it. Here at Reclaim, you might run a global instance of Jekyll in order to generate the flat HTML.

More complicated? Yes. I’m a big fan of GitHub Pages, where every site automatically runs Jekyll and does free hosting including domain names.

Hope that helps explain what I mean.

Appreciate the clarification and that’s a fair point, switching to a different CMS like Jekyll is definitely more flexible if you want to be able to reuse the content again in another context versus simply archiving it. (I also like @cogdog’s idea of simply keeping a dormant copy of the database or SQL backup in case you want to revert). But if the goal is to actually archive I’m not sure I agree the metadata is lost. Look at Investing in Community as an example. Viewing the source and looking at the post itself all the tags and other information are completely intact. You’re right that I can’t turn this into anything else, but as an archival method it still seems to me like a really nice option. I suppose with any archiving methodology though the rule of thumb is to have a variety of formats to support longevity.

yep, wget has been my go to UNIX utility for years. You can run it on Mac, Win, Linux pretty much anything. I use it to crawl websites and create a local mirror of them getting all related files. Since web apps hide the server side code and just deliver the HTML to the browser, that’s all wget sees so you end up with a local html archive.

We can argue and say “easily parseable” or “documented” metadata. The markdown files are more like a DB backup.

Those markdown files are easier to re-hydrate than HTML, which you have to write a custom parser for.

Small sites: not a big deal. Big sites: big deal.

I had not thought of the metadata issues. Like Tim suggested, the 2 small to puny sites I did are purely to preserve the sites as they were presented. The chances of them ever being needed again are on the order of me going on a trip to Mars.

But a slap on the head thing I should have done before the decommissioning Wordpress front end would have been to export the site- at least I would have the posts and meta data as XML. Someone (aka not me) could probably figure a way to do some kind of meta data export as JSON?

It’s pretty exciting to see how a thread like this rolls into a big ball of possibility.

I think I’m with you @timmmmyboy on this. Use cases I’m thinking for this are: 1. A WP site is created to facilitate students writing as part of a course assignment (write-in-public type thing) for course x in semester y - prob with a sub-domain or sub-blog on a multi-site. For various reasons, we want/need to create a diff Wp site for the similar assignment for course x in semester y+1. Once semester y is finished, I want students & others to be able to always view the site on Web and have links to it continue to work. But there will not be any editing or changing of anything on that site. I don’t want to keep it in a live WP install since that’s work to maintain & protect. If the metadata can be found out, that’s OK. Don’t need to ever reconstitute the site as a live WP install. OR 2. student has a blog on a school’s WP multisite (think Rampages). After student grads and is gone but hasn’t/doesn’t want to migrate it to their own Domain somewhere, would like to keep a static version on public web but not allow editing.

Yes, Wordpress XML contains all the metadata. It’s another example of “easily parseable” metadata. There are Jekyll importers, for example, that can read in WP XMLand reconstitute a site.

Definitely agree there. I think they’re separate use cases but important for people thinking about archiving to consider as a backup plan.

Where I think this does get tricky from an ethical standpoint is when you’re archiving work that is not your own. Just because we can scrape, well the question is should we. For personal stuff absolutely. I’m less clear on the implications of things like the work of students who have already graduated @econproph (recognizing we already do this through large Multisite systems with blogs that tend to stick around after a student leaves). I suppose a request to remove content is easy when the student’s work is the entire site like a blog in a multisite. When it’s a scattering of posts throughout a site with multiple authors that is going to be almost impossible without first reconstructing the site back into something dynamic so I think a backup plan like those proposed is a good practice even if one doesn’t expect to ever need to use it.

On one hand, the whole point of having students have their own space is so that they are in control. But, if you post something publicly on the internet, it’s public. It can end up in the Way Back Machine, or archived in Google indefinitely. Works that are private and part of a class come under FERPA guidelines.

When I was teaching with Discourse, I tried hard to encourage students to always refer to each other by @usernames rather than their names. This would make it easier to reliably anonymize data by replacing @usernames with pseudonyms if the discussions were to be analyzed for resesarch. (Sure, that’ll never happen, but my undergrad degree was in CS).

Just wanted to chime in on this thread as I’ve spent years archiving public content of faculty curricular WordPress sites using wget and SiteSucker. I’m finally getting very close to automated archives by scripting HTTrack. Once you get it up and running and figure out all the flags you need this is the fastest way I’ve found to do a full scrape while converting to relative urls.

Very interested in what you’re describing. That’s essentially my use case: curricular sites that need to be viewable but will not have content added after some semester.

I was just having a conversation about this very approach for archiving a domain project, or archiving a university’s old tilde space servers. I would love to know more!